So Floyd, Le Puy du Fou, Orelsan, the Artrock festival, Les Vieilles Charrues, Riles, Trackmania, Maxime Gasteuil: they all put their trust in the Naostage tracking solution, which was developed by a French company five years ago. We spoke to Paul Cales, president and co-founder, about the system’s stability.

I met up with Paul Cales, President of Naostage, at the Espace Martin Luther King in Créteil, for a demo of the K System. This tracking solution for audio, lighting and video professionals continues to amaze us with its particularly ingenious technology and its upcoming features.

We had already met them at ISE 2023 and the team had taken the time to explain the three elements that make up the K System: Kapta, Kore and Kratos. In this article, we wanted to ask the questions that potential users are asking: how long does it take to set up, and is this tracking system really reliable, particularly for use on tour?

Is the K System stable, and why do productions need an AI-based tracking system?

Paul claims a 20-minute installation time. This particularly rapid setup is almost unbelievable, and could even create a degree of anxiety in any user inclined to double-check (or even triple-check) his installation, so that he can sleep soundly at night. I tell him what I think.

Paul Cales : It really takes 20 minutes to get the system up and running. Then comes the stage of parameterizing what we want to do with third-party systems based on the tracking data.

This involves, for example, the lighting programming of the show, with the decisions to track this or that performer and assign him or her a profile that will coordinate the machines.

SLU : The “So Floyd” tour, which is currently under way, uses your system. How long did it take to set up the system, on top of the 20 minutes it takes to install it?

Paul Cales : It took me two hours to set up the whole show. Then I was on site for one of the dates to hand it over to the technicians working on the tour and teach them how to use the system. They’re very happy with it.

Indeed, the response from the So Floyd team has been extremely enthusiastic and, as Paul explains, the K System meets a growing need for customization of increasingly interactive and immersive audiovisual effects.

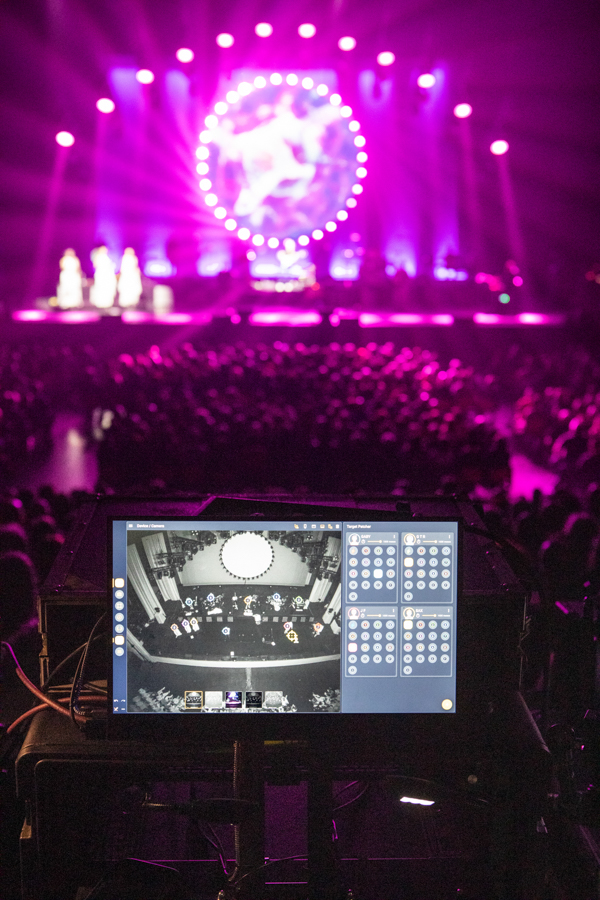

Watch the feedback from Sébastien Huan, lighting, network and tracking technician, and Laurent Begnis, lighting designer and console operator, for the “So Floyd” show.

Paul Cales : We also realized that there was a real lack of tools and that tracking is done manually in 95% of shows by spot operators, whom productions are struggling to find, and the Covid period hasn’t helped. Even though these are jobs that require real know-how, there is an urgent need to automate these methods in order to achieve substantial budget savings, but above all to allow technicians to be redeployed to other, more creative roles.

“Tracking is done manually in 95% of shows by operators, whom productions are struggling to find, and the Covid period hasn’t helped.” Paul Cales, president and founder of Naostage

SLU : The system emphasizes its “beaconless” functionality, while others emphasize the security provided by bodypack or tag coupling. Why this choice?

Paul Cales : Let’s face it, the main purpose of a bodypack or tag is to reassure the wearer: “I’ve got a bodypack, so they can’t lose me…”, but this adds a layer of unnecessary technical complexity, and therefore potential points of failure. We also realized that these systems were restrictive for the performers.

Putting a bodypack or tag on a model for a fashion show isn’t possible, nor is it for conferences with a very large number of speakers, or for live performances and plays, when the performers are already equipped with wireless mic and in-ear monitor bodypacks.

The second point is the cost associated with the installation and calibration of systems that require numerous antennas or cameras to be connected around the stage, not to mention the interference to which the installation will then be vulnerable. So there’s an associated cost that we felt was superfluous, which we wanted to reduce by proposing a different solution.

Installing the K System

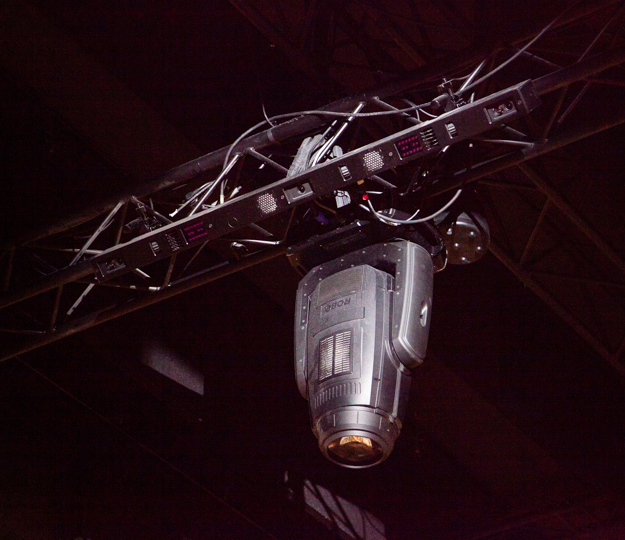

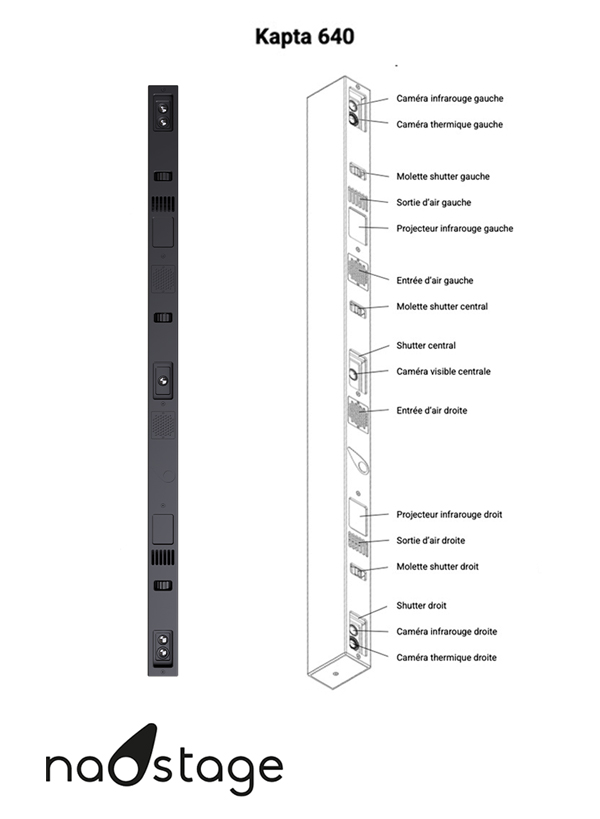

Let’s delve into the details of the K System to get a better understanding of the Kapta tracking unit.

Kapta is the entry point and first element of the K System. It is a sensor composed of five cameras, including two pairs of thermal and near-infrared cameras located on either side to reproduce a kind of interocular distance and obtain a 3D stereo-vision view. These are combined with a visible-spectrum camera in the center. The device thus obtains a sequence of tracking positions with x-y-z coordinates, which is very practical for tracking a performer as they climb a riser or a staircase.
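To illustrate why two cameras set apart can yield a z coordinate, here is a minimal sketch of the textbook stereo relation for two parallel pinhole cameras (depth = focal length × baseline / disparity). It is not Naostage's algorithm, and the focal length, baseline and disparity values are hypothetical, not Kapta specifications.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by two parallel cameras (pinhole model).

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two cameras, in meters
    disparity_px -- horizontal shift of the point between both images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical values chosen for illustration only.
distance = depth_from_disparity(focal_px=1000.0, baseline_m=0.5, disparity_px=50.0)
print(f"{distance:.1f} m")  # prints "10.0 m"
```

The smaller the shift between the two images, the farther away the subject, which is why a wider spacing between the camera pairs helps at long range.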

Thermal and infrared cameras work on the same principle: recording the infrared radiation emitted by bodies.

However, a thermal camera is sensitive to waves emitted at wavelengths of the order of ten microns (10⁻⁵ m), which are directly related to the temperature of the body producing them.

An infrared camera, on the other hand, is sensitive to waves emitted at micron (10⁻⁶ m) wavelengths.
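The "ten microns" order of magnitude can be checked with Wien's displacement law, which gives the wavelength at which a body radiates most strongly as a function of its temperature. The short sketch below is not from Naostage; it assumes the human body behaves roughly like a blackbody at about 310 K (37 °C).

```python
WIEN_B = 2.897771955e-3  # Wien displacement constant, in meter-kelvin


def peak_wavelength_m(temperature_k: float) -> float:
    """Wavelength (in meters) of maximum thermal emission for a blackbody."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B / temperature_k


# Assumed body temperature of 310 K, for illustration.
body_peak = peak_wavelength_m(310.0)
print(f"{body_peak * 1e6:.1f} µm")  # prints "9.3 µm"
```

The result, a little under 10 µm, sits in the band that thermal cameras are built to see, which is why a performer stands out regardless of stage lighting.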

Last but not least, this device is attached to omega brackets with cam lock fasteners. In addition, its layout can be adjusted by means of small yokes that allow it to be flown and then tilted.

SLU : Are there any special features to take into account when installing it?

Paul Cales : You simply need to rig it in such a way that it can see the area in which you want to track. It can operate at heights ranging from 5 to 15 meters and cover a stage measuring 20 meters by 12 meters, so a single unit is enough for the stage of a Zenith-size venue.

For Orelsan’s Bercy dates, there was one sensor for the stage and one for the proscenium, which extended into the audience. The maximum distance for tracking a performer without loss of accuracy is between 30 and 40 meters, which is more than enough.

This answer is clear and simple, as is the plug-and-play nature of the Kapta. Power is supplied via a PowerCon, and data is transmitted to the Kore server over the show’s fiber network, to which it is connected via RJ45, an EtherCon plug and a proprietary protocol.

Paul explains, “It’s important to plan for a minimum of 1 Gigabit throughput per Kapta, so as to avoid any loss of data from the five cameras and achieve smooth tracking”.

A video tutorial on how to install the K System:

The second element, Kore, is a classic 4 rack-unit server (i.e. the dimensions of a classic media server). It features two network cards, one to receive tracking information from one or two Kaptas (in case of a larger coverage area), and the other to send data to third-party systems.

“Putting a bodypack or a tag on a model for a fashion show isn’t possible, and neither is a conference with a very large number of speakers.” Paul Cales

The Kore server also integrates an artificial intelligence that processes the Kapta data to detect, track and identify, in 3D, the performers present in the area covered. “Today, we limit it to 16 individuals simultaneously per server in live show mode, but for interactive and immersive installations, the server can be expanded to track the public,” explains Paul.

He continues: “This intelligence, which we also call a neural network, has been trained by Deep Learning on hundreds of shows at festivals, concerts, plays, theme parks and events, where we installed sensors to collect data and learn how to identify and track people under real industry conditions. This training is still ongoing, to cover all possible cases and make the AI even more robust and efficient, as in cases where there are a lot of special effects (pyrotechnics, smoke, etc.)”.

SLU : How long does this training process take?

Paul Cales : You have to understand that training AI is not a question of quantity, but rather a question of the quality of the data you give it as input. That’s why this phase is carried out in-house at Naostage. For “Le Mime et l’Etoile” at the Puy du Fou, it took one night, and we can also carry out this process during general rehearsals in residence, to cover any possible scenarios.

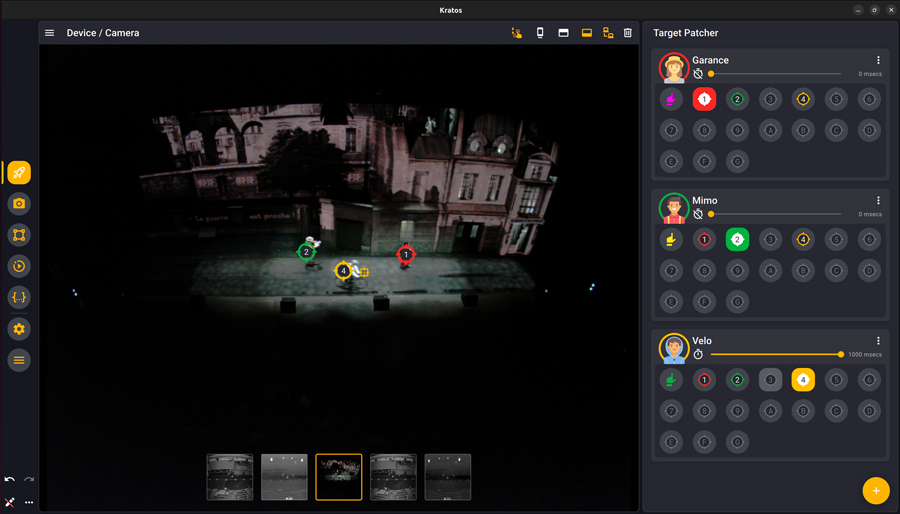

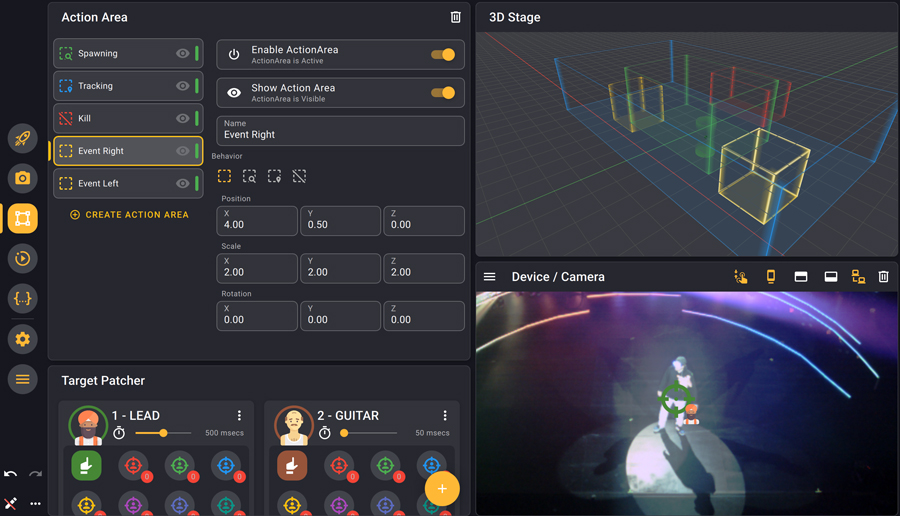

The Kratos software, the third and final element, displays the various camera views (visible spectrum, thermal, infrared), a 3D scene and the patcher for assigning subsequent detections to programming parameters.

Once the system has been installed and connected, the calibration phase begins, to provide a common reference frame for the third-party systems in the 3D space in which you intend to work.

To define this reference frame, you need to mark a 6-point rectangle on the ground and define its actual dimensions. Please note that this has nothing to do with the tracking zone; it’s just a rectangular x-y-z reference frame. The calibration phase ends here, and the system is up and running in 20 minutes, as promised by the manufacturer.

How it’s used

The system can interact with third-party systems such as consoles, media servers or spatialized sound systems. When a person enters the sensor’s field of view, a detection is created, which an operator must then assign to a target in the “Target patcher”. It is also possible to activate the auto-assign function, which automatically assigns each new detection to the next target in sequence. The console then receives the tracking data from the server and plays back its programming.
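As a rough illustration of the auto-assign idea, the sketch below hands each new detection the next free target in list order. The class name `TargetPatcher`, the method `auto_assign` and the target labels are hypothetical and do not reflect the Kratos software's actual behavior or API.

```python
from typing import Dict, List, Optional


class TargetPatcher:
    """Hypothetical patcher: maps detection IDs to named targets in order."""

    def __init__(self, target_names: List[str]) -> None:
        self._targets = list(target_names)
        self._assigned: Dict[int, str] = {}

    def auto_assign(self, detection_id: int) -> Optional[str]:
        """Return the target for this detection, assigning the next free one."""
        if detection_id in self._assigned:
            return self._assigned[detection_id]  # already patched
        used = set(self._assigned.values())
        for name in self._targets:
            if name not in used:
                self._assigned[detection_id] = name
                return name
        return None  # every target is already taken


patcher = TargetPatcher(["Singer", "Guitarist"])
print(patcher.auto_assign(7))   # first detection gets "Singer"
print(patcher.auto_assign(12))  # second detection gets "Guitarist"
```

In a real show the operator would still be able to override such an assignment by hand, as the article describes.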

Among the target parameters, the “prediction” slider can be modified. This means that, depending on the tracked person’s speed and acceleration, the Kore can send a “Predicted position” to the console, to compensate for the mechanical inertia of the motors on the moving lights, for example, and keep the subject in the beam.

Paul adds: “It works like a followspot operator who foresees a dancer’s movements”. This is a useful parameter for giving the beam more or less inertia, depending on the situation. Last but not least, the operator can take control of the system from the Kratos software with his mouse or directly on a touch screen.
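The prediction parameter described above can be pictured with basic kinematics: extrapolate the current position using speed and acceleration over a short look-ahead time. This is only a sketch under that assumption; how the Kore actually computes its “Predicted position”, and how the slider maps to a look-ahead time, is not documented here.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def predict_position(pos: Vec3, vel: Vec3, acc: Vec3, lookahead_s: float) -> Vec3:
    """Constant-acceleration extrapolation: p + v*t + 0.5*a*t^2 on each axis."""
    t = lookahead_s
    x, y, z = (p + v * t + 0.5 * a * t * t for p, v, a in zip(pos, vel, acc))
    return (x, y, z)


# Hypothetical performer walking along x at 1.5 m/s, predicted 0.2 s ahead.
predicted = predict_position(
    pos=(2.0, 1.0, 0.0), vel=(1.5, 0.0, 0.0), acc=(0.0, 0.0, 0.0), lookahead_s=0.2
)
print(predicted)
```

A look-ahead of zero returns the measured position unchanged; increasing it pushes the aim point further along the performer's path, at the cost of overshooting when they stop abruptly.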

Kratos can also interface with third-party systems and automate certain actions, either chosen from an “action bank” or coded in JavaScript (for example triggering an effect when a performer enters a zone, turning on a certain effect, or cutting the performer’s microphone when he leaves the stage).

To trigger these actions, we assign them to “action areas”. There are four types of area:

– Green, where the artificial intelligence is authorized to detect people.

– Blue, where anyone detected will be followed.

– Red, where subjects are no longer tracked or detected.

– Yellow, for triggering an action when the zone is crossed.
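The four area types listed above can be modeled as simple rectangles on the stage floor. The sketch below is an assumption-laden illustration, not Kratos code: the names `ZoneKind`, `Zone` and `entered` are invented, zones are assumed to be axis-aligned rectangles, and a yellow zone is taken to fire when a target moves from outside to inside.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class ZoneKind(Enum):
    DETECT = "green"    # AI is authorized to detect people
    FOLLOW = "blue"     # anyone detected is followed
    EXCLUDE = "red"     # subjects are no longer tracked or detected
    TRIGGER = "yellow"  # an action fires when the zone is crossed


@dataclass(frozen=True)
class Zone:
    kind: ZoneKind
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def entered(zone: Zone, prev: Tuple[float, float], curr: Tuple[float, float]) -> bool:
    """True when the target was outside the zone and is now inside it."""
    return not zone.contains(*prev) and zone.contains(*curr)


# Hypothetical 2 m x 2 m trigger zone at the stage origin.
mic_zone = Zone(ZoneKind.TRIGGER, 0.0, 2.0, 0.0, 2.0)
if entered(mic_zone, prev=(-1.0, 1.0), curr=(1.0, 1.0)):
    print("fire action: performer entered the yellow zone")
```

Checking the transition rather than the position alone keeps the action from firing on every frame while the performer stays inside the zone.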

SLU : A show can be divided into chapters, so how do events follow one another chronologically?

Paul Cales : You can define thematic action groups and activate them as you go along. What’s more, another module called KratOSC allows you to control all Kratos elements via OSC. So you can use QLab, a show control application, to run a time-coded show and activate/deactivate zones and actions as the show progresses.

OSC messages can also be received, which allows two-way communication between systems. This makes it a real tool for show programming and automation, enabling every technical department (audio, lighting, video, effects, etc.) to create complex scenarios.
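For readers unfamiliar with OSC, the sketch below builds a minimal OSC 1.0 message by hand: a null-terminated address padded to four bytes, a type tag string, then a big-endian 32-bit integer. The address `/kratos/zone/intro/enable` is purely hypothetical; the real KratOSC address scheme is not given in this article.

```python
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: int) -> bytes:
    """Build an OSC message carrying a single 32-bit integer argument."""
    if not address.startswith("/"):
        raise ValueError("an OSC address must start with '/'")
    return _osc_string(address) + _osc_string(",i") + struct.pack(">i", value)


# Hypothetical address: enable a zone named "intro" (1 = on, 0 = off).
packet = osc_message("/kratos/zone/intro/enable", 1)
print(len(packet), "bytes")
```

Such a packet would typically be sent over UDP, for example with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, (host, port))`, where the host and port are those configured on the receiving system.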

The BeaKon radio tags supplement visual tracking and the “Light Patcher”.

To meet customers’ needs, Naostage will officially launch its BeaKon radio tags this autumn to supplement visual detection. However, Paul Cales confirms that they are already available for use. They enable continuous identification and tracking outside the vision zone. This is a particularly interesting option for shows that require complete automation, where the system is planned to run without an operator.

“Pairing them with visual detection allows us to bypass any difficulties that might arise due to radio interference,” explains Paul. We can only applaud the comprehensive features of this solution, which, thanks to a particularly responsive and creative team, is as attuned as possible to the evolving needs of the industry.

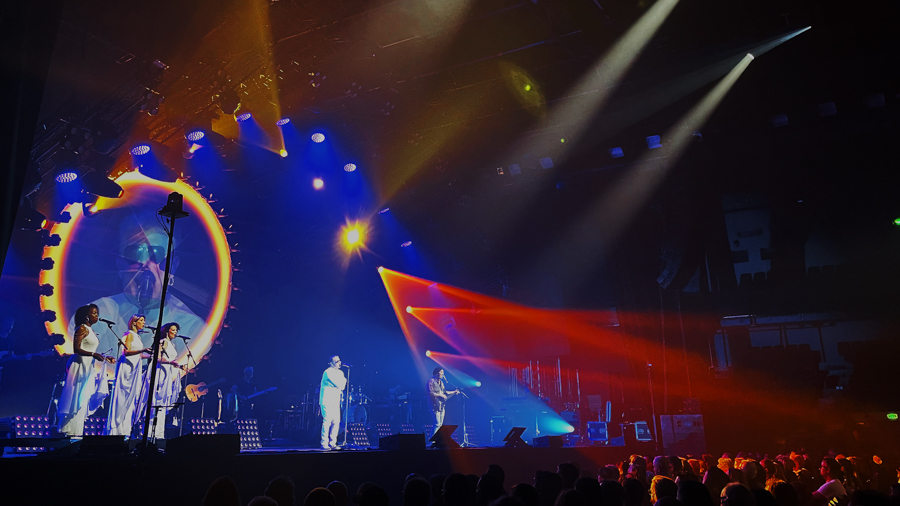

So Floyd

A photo gallery of the So Floyd tour currently underway in France, using the Naostage tracking solution to manage followspots.

It’s a new system that raises questions about the absence of physical tags and about its subjects, who sometimes operate in extreme conditions (fog, effects). The Naostage team is well aware of this, and in response has come up with a particularly innovative and intensively-trained AI to deal with any eventuality, just as a followspot operator might do.

Installation time is significantly reduced, and only one operator is needed to track up to 16 or even 32 targets simultaneously. Conversely, Kapta, Kore and Kratos can also create an unprecedented multiple-beam effect on a single target.

The system sends its tracking data one-way to third-party systems such as consoles and media servers, but also offers two-way dialogue over OSC. It goes even further, with detailed programming possibilities for controlling lighting fixtures, PTZ cameras, LED panels or spatialized sound systems.

From musicals to virtual studios, from live festival shows to conferences, from concerts to theme parks, all applications are covered by this remarkable technological breakthrough, which we are honoring with an SLU Innovation Award.

For more information on :

– Naostage or to book a demo

– Facebook

– Instagram

– Linkedin

– Youtube